If you’re curious about the future of robotics and AI, Jon Morgan is the person to ask. A mechanical and robotics engineer, Jon has spent the last decade creating new technologies and bringing them to the global market, including military radar, autonomous vehicle sensors, police/fire robotics, and more. After founding an IoT startup and spending several years at QinetiQ North America, Jon headed to Mountain View to spearhead hardware projects at Google. (Have you heard of the Google Pixelbook? He helped launch it!)

If you’re still not impressed, Jon has an MBA from Babson College with a focus on Managing Technical Innovation and Growth and studied AI for business decision-making at MIT. He’s also a featured speaker at Product School, the world’s first technology business school. We’re thrilled to have him on the lineup at this year’s DIG SOUTH Tech Summit, where he’ll discuss how companies of all sizes can embrace AI, bots and machine learning to empower clients.

In the Q&A below, get a sneak peek at how hardware empowers AI, what trends he anticipates in the next few years, and the key factors every business should consider when thinking about AI.

Tell us more about your work on the Hardware team at Google.

I started as a Product Manager in Google’s hardware group when it was first launching about two years ago. Since then, I’ve been on the Laptop & Tablet team, focusing on launching products like the Pixelbook and Pixel Slate. We have been continually looking to expand the reach and influence of the Chrome OS platform to more markets, price segments and user experiences. Pixelbook and Pixelbook Pen brought industry-leading design as well as the industry’s lowest latency stylus to the ecosystem, and Pixel Slate emerged with the official launch of Chrome OS Tablet UI. It has been a very exciting time to be a part of the Hardware group as we grow at an outrageous pace.

You have a background in both mechanical and robotics engineering. From an engineering perspective, what interests you most about artificial intelligence?

AI has begun to lower the barrier to entry for traditionally complex problem sets, from more conversational chatbots to autonomous vehicles and even just more intelligent sensor fusion for mobile robots. APIs from TensorFlow and others are making it easier to build out models; this not only encourages people to try different approaches, but also shows companies the benefit of sharing their success with others. The amount of data being processed and learned from is creating better models that do not need to be trained separately by every company or individual.

We often think about AI in terms of software, but some technologists argue that the future of AI lies in hardware. Do you think that’s true?

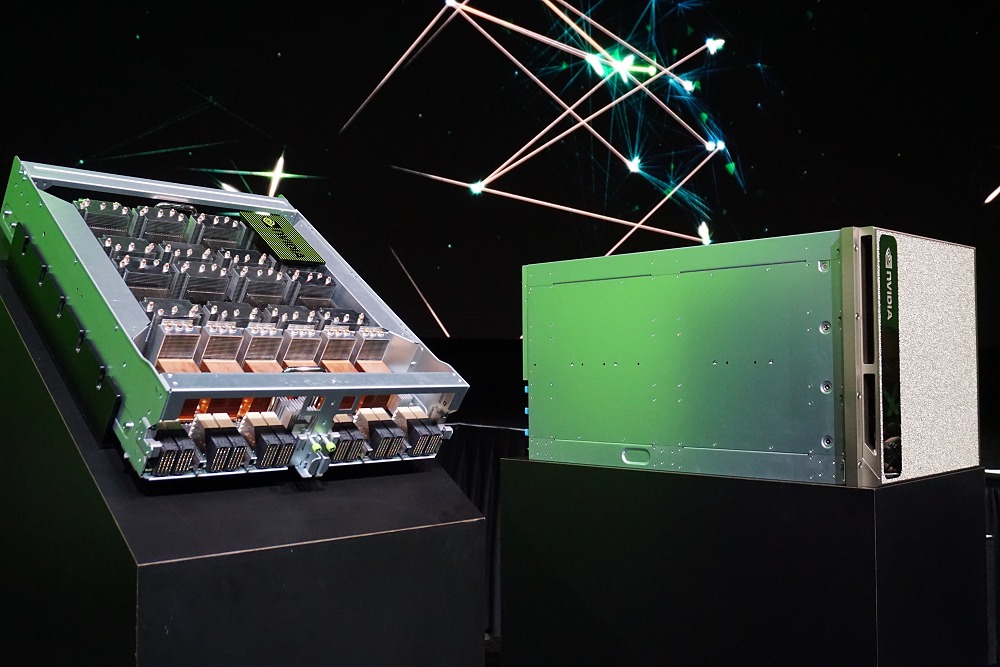

AI and massive data processing have to be accomplished somewhere. Since some of the first AI chips were released through TensorFlow, we have seen manufacturers like Intel, Qualcomm and Nvidia bring dedicated silicon to the marketplace. These packages bring advances in accelerators and GPUs to the forefront, from Nvidia’s DGX for cloud-based AI to the Jetson chip that is dedicated to autonomous vehicles and robotics. None of the crazy AI processing we wanted to start seeing in real time would ever be possible without these hardware advances. Hardware also opens the door to bringing on-device AI and machine learning into the world of enterprise use cases, where privacy is a top concern and corporations do not want their data going to the cloud.

What are some examples of AI hardware that you think will have a big impact?

The two pieces of AI hardware that I think have been changing, and will continue to change, the way we operate in our lives are Google’s Edge TPUs and Nvidia’s Jetson AGX Xavier platforms. These will open up new doors for on-device machine learning for enterprises, phones, robots and autonomous cars. They will empower everything from IoT devices as small as your outlet to 18-wheelers towing tons of cargo.

You’ve been involved in robotics for nearly a decade. What’s one of the biggest changes you’ve witnessed in the industry?

One of the biggest and most exciting changes that I have seen in the industry is the shift from robotics being the entire point of a company to robotics being a key piece of a bigger puzzle that solves real user needs. Enterprises and users are seeing that automation solutions will make their lives easier, from warehouse fulfillment, to autonomous cars, to drones…the list goes on. Robotics used to be a niche idea that only a small number of people had access to, and now anyone can build their own robot and positively impact a workflow they are doing today. There is no longer the fear that this is impossible.

When it comes to bots / machine learning, what trends do you anticipate in the next 5-10 years?

CRO taking a prominent role. I believe that the idea of a Chief Robotics Officer will continue to grow. Robotics has become an ROI-positive play for many companies, but integrating it and understanding when to use robotics vs. other options is a very complex conversation. The CRO will need to lead these conversations.

Semi-autonomous mobile vehicles will start to augment our current workflows. Everyone has seen how Amazon has transformed the warehouse of today with its introduction of Kiva robots. In the next 5-10 years, we will see our normal workflows being segmented in ways that we could not imagine, with portions being replaced by robotics solutions, from cooking, to delivering produce, to servicing hotel rooms and so much more.

Assistant interaction. The last area of innovation will come in blending more HMI (human-machine interaction) aspects into our daily assistants (Amazon Alexa, Google Home, etc.). We are getting more used to talking to our phones or a speaker, but I think that we will start to see more motion in those devices, and this emotional connection through motion will change not only what they can accomplish, but how we view them.

You studied AI for Business Decision-Makers at MIT — what are 1-2 key factors any company should consider when looking at AI as part of their strategy?

1. Make sure to understand the different approaches to solve the same problem, one being AI-based and one not, to make sure you are using the most efficient path. It is not always the AI path.

2. Be careful with user data and make sure that when you are curating data sets you are not overstepping the agreement you have made with your users.

If you could debunk one misconception about bots / AI, what would it be?

That robots are here to take your jobs. The goal of robots is to do things that otherwise would be dull, dirty or dangerous. They exist to help augment the work that skilled people can do, but the complexity of what we as humans can accomplish is amazing. While there may be a shift in where jobs are available, the increase in automation through robots will open up many new sectors of work, from manufacturing to transportation and beyond. Look back at when the car was first introduced: people thought cars would eliminate jobs for those caring for horses, but in actuality it created the entire transportation industry, from manufacturing to mechanics to bridge builders and beyond.

Are you wondering, ‘How much should I be learning about AI, and when is it time to put it to work for my business?’ Don’t miss your chance to learn from experts like Jon Morgan during the DIG SOUTH Tech Summit! He joins us for a lively panel discussion that outlines how to incorporate AI and machine learning into your strategic planning and other processes to grow your company. Register now to reserve your spot!